11.2 The Evolution of the Internet

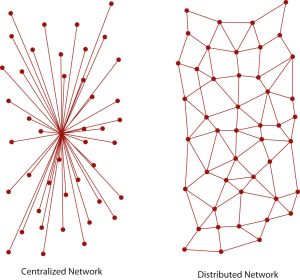

From its early days as a military-only network to its current status as one of the developed world’s primary sources of information and communication, the Internet has come a long way in a short period. Yet two elements have stayed constant and provide a coherent thread for examining the origins of the now-pervasive medium. First, the Internet can trace its need for persistence to the influences of the Cold War, which necessitated its design as a decentralized, indestructible communication network.

Second, the development of rules of communication for computers, known as protocols, was not a solitary endeavor. Computer scientists, in a spirit of consensus and collaboration, developed these rules to facilitate and control online communication, shaping how the Internet works. Facebook offers a simple example of how a protocol operates: users can easily communicate with one another, but only by accepting protocols that include wall posts, comments, and messages. Facebook’s protocols both enable communication and govern how it takes place. The need for persistence and the development of computer protocols have bridged the Internet’s origin to its present-day incarnation, with the audience playing a crucial role in this evolution.

The History of the Internet

The near indestructibility of information on the Internet derives from a military principle used in secure voice transmission: decentralization. In the early 1960s, the RAND Corporation developed a technology that enabled users to send secure voice messages. This technology, known as ‘packet switching,’ is similar to sending a letter in pieces instead of all at once. It contrasts with the hub-and-spoke model, in which a telephone operator (the ‘hub’) would patch two people (the ‘spokes’) through directly. The new system instead sent a voice message through an entire network, or web, of carrier lines. Because the message did not need to travel through a central hub, it could take many different possible paths to its destination.

During the Cold War, the U.S. military grew concerned about a nuclear attack destroying the hub in its hub-and-spoke model. With this new web-like model, a secure voice transmission would be more likely to endure a large-scale attack. A web of data pathways could still transmit secure voice “packets,” even if a few of the nodes—places where the web of connections intersected—got destroyed. Only through the destruction of all the nodes in the web could the data traveling along it disappear entirely—an unlikely event in the case of a highly decentralized network.
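The difference between the two models can be illustrated with a small sketch. The node names, the two toy networks, and the `reachable` helper below are hypothetical and chosen only for illustration; they do not describe any actual military network. The function simply checks whether any path still exists between two nodes once some nodes have been destroyed.

```python
from collections import deque

def reachable(links, start, goal, destroyed=frozenset()):
    """Return True if goal can be reached from start, skipping destroyed nodes."""
    if start in destroyed or goal in destroyed:
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbor in links.get(node, ()):
            if neighbor not in destroyed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False

# Hub-and-spoke: every spoke connects only to the central hub.
hub_and_spoke = {
    "hub": ["A", "B", "C", "D"],
    "A": ["hub"], "B": ["hub"], "C": ["hub"], "D": ["hub"],
}

# Web-like mesh: each node has several neighbors, so many paths exist.
mesh = {
    "A": ["B", "C"],
    "B": ["A", "C", "D"],
    "C": ["A", "B", "D"],
    "D": ["B", "C"],
}

print(reachable(hub_and_spoke, "A", "D", destroyed={"hub"}))  # False
print(reachable(mesh, "A", "D", destroyed={"B"}))             # True
```

Losing the single hub cuts off every spoke, while the mesh still delivers from A to D through C after B is lost.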

This decentralized network could only function through standard communication protocols. Just as individuals use specific protocols when communicating over a telephone (“hello,” “goodbye,” and “hold on for a minute”), any machine-to-machine communication must also use protocols. These protocols constitute a shared language that enables computers to understand each other clearly and easily. In English, nonverbal communication and context help parties understand the meaning of polysemous words like “rat” and “watch,” but computer language leaves no room for such nuances.

The Building Blocks of the Internet

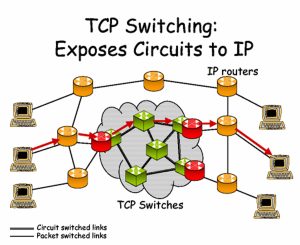

In 1973, the U.S. Defense Advanced Research Projects Agency (DARPA) initiated research on protocols that would enable computers to communicate over a distributed network. This work paralleled work done by the RAND Corporation, particularly in the realm of a web-based network model of communication. Instead of using electronic signals to send an unending stream of ones and zeros over a line (the equivalent of a direct voice connection), DARPA used this new packet-switching technology to send small bundles of data. Instead of sending a message as an unbroken stream of binary data—extremely vulnerable to errors and corruption—they could instead package it as only a few hundred numbers.

Imagine a telephone conversation in which any static in the signal would make the message incomprehensible. Whereas humans can infer meaning from “Meet me [static] the restaurant at 8:30,” computers do not necessarily have the linguistic capability to infer the missing word “at.” To a computer, this constant stream of data would arrive incomplete (or “corrupted,” in technological terminology) and confusing. Considering the susceptibility of electronic communication to noise or other forms of disruption, it would seem that computer-to-computer transmission would prove nearly impossible.

However, the packets in this packet-switching technology contain information that allows the receiving computer to verify the packet has arrived uncorrupted. Because of this new technology and the shared protocols that enabled computer-to-computer transmission, a single large message could be broken into multiple pieces and sent through an entire web of connections, speeding up the transmission and making it more secure.
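A minimal sketch of this idea follows. The packet layout (a sequence number, a payload, and a checksum) and the function names are assumptions made for illustration, and the CRC-32 checksum from Python’s `zlib` module stands in for whatever verification data a real protocol carries; the text does not specify one.

```python
import random
import zlib

def make_packets(message: bytes, size: int = 8):
    """Split a message into (sequence, payload, checksum) packets."""
    packets = []
    for seq, start in enumerate(range(0, len(message), size)):
        payload = message[start:start + size]
        packets.append((seq, payload, zlib.crc32(payload)))
    return packets

def is_intact(packet) -> bool:
    """Recompute the checksum and compare it with the one in the packet."""
    _, payload, checksum = packet
    return zlib.crc32(payload) == checksum

def reassemble(packets) -> bytes:
    """Verify every packet, then rebuild the message in sequence order."""
    if not all(is_intact(p) for p in packets):
        raise ValueError("corrupted packet detected")
    ordered = sorted(packets, key=lambda p: p[0])
    return b"".join(payload for _, payload, _ in ordered)

message = b"Meet me at the restaurant at 8:30"
packets = make_packets(message)
random.shuffle(packets)  # packets may arrive in any order
print(reassemble(packets) == message)  # True

# Simulate static on the line: alter one byte of a payload.
seq, payload, checksum = packets[0]
damaged = (seq, b"X" + payload[1:], checksum)
print(is_intact(damaged))  # False
```

Because each packet carries its own sequence number and checksum, the receiver can accept packets in any order, detect a damaged one, and in a fuller design request only that piece again.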

This requires the network to have a host, or physical node, that directly connects to the Internet and ‘directs traffic’ by routing packets of data to and from other computers connected to it. An Internet protocol, or IP, address is like a unique home address for each computer on the Internet. Each unique IP address refers to a single location on the global Internet, but it can serve as a gateway for multiple computers. For example, a college campus may have a single global IP address for all its students’ computers, and each student’s computer may then have its local IP address on the school’s network. This nested structure allows billions of different global hosts, each with any number of computers connected within their internal networks. Think of a campus postal system: All students share the same global address (1000 College Drive, Anywhere, VT 08759, for example), but they each have a private internal mailbox within that system.
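The nested addressing described above can be sketched with Python’s standard `ipaddress` module. All of the addresses and machine names below are hypothetical: `8.8.8.8` is used only as a stand-in for a campus’s globally routable address, and `10.20.0.0/16` is an assumed private range for the internal network.

```python
import ipaddress

# Stand-in for the single global address shared by the whole campus (assumption).
campus_public = ipaddress.ip_address("8.8.8.8")

# Assumed private range used inside the campus network.
campus_network = ipaddress.ip_network("10.20.0.0/16")

# Hypothetical student machines, each with its own local address.
student_machines = {
    "alice-laptop": ipaddress.ip_address("10.20.1.15"),
    "bob-desktop": ipaddress.ip_address("10.20.7.42"),
}

def describe(address) -> str:
    """Label an address as local to a private network or globally routable."""
    return "private (local)" if address.is_private else "public (global)"

print(campus_public, "->", describe(campus_public))
for name, address in student_machines.items():
    inside = address in campus_network
    print(name, address, "->", describe(address), "| on campus network:", inside)
```

In the postal analogy, the public address plays the role of 1000 College Drive, and each private address plays the role of an internal mailbox.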

The early Internet was called ARPANET, after the U.S. Advanced Research Projects Agency (which added “Defense” to its name and became DARPA in 1973), and it consisted of just four hosts located at educational institutions: UCLA, Stanford, UC Santa Barbara, and the University of Utah. Since then, the Internet has grown exponentially to more than half a million hosts, and each of those hosts likely serves thousands of people (Central Intelligence Agency). This staggering growth is a testament to the power of technology and human innovation. Each host uses protocols to connect to an ever-growing network of computers. Because of this, the Internet does not exist in any one particular place; rather, it represents a vast network of interconnected computers that collectively form the entity known as the Internet. The Internet has no single physical structure; it exists as a series of protocols that enable this type of mass communication.

The Transmission Control Protocol (TCP) gateway is one of the core components of the Internet. As proposed in a 1974 paper, the TCP gateway acts “like a postal service” (Cerf et al., 1974). Without knowing a specific physical address, any computer on the network can ask for the owner of any IP address, and the TCP gateway will consult its directory of IP address listings to determine precisely which computer the requester has attempted to contact. The development of this technology was an essential building block in the interlinking of networks, as computers could now communicate with each other without knowing the specific address of a recipient; the TCP gateway would handle the details. Additionally, the TCP gateway checks for errors and ensures that data reaches its destination intact. Today, this combination of TCP gateways and IP addresses is referred to as TCP/IP and essentially operates like a worldwide phone book for every host on the Internet.

You’ve Got Mail: The Beginnings of the Electronic Mailbox

E-mail has, in one sense or another, been around for quite a while. Initially, people would record electronic messages within a single mainframe computer system. Each person working on the computer would have a personal folder, so sending that person a message required nothing more than creating a new document in that person’s folder, much like leaving a note on someone’s desk (Peter, 2004), so that the person would see it when they logged onto the computer.

However, once networks began to develop, things became slightly more complicated. Computer programmer Ray Tomlinson invented the naming system used today, designating the @ symbol to denote the server (or host, as discussed in the previous section). In other words, name@gmail.com tells the host “gmail.com” (Google’s e-mail server) to drop the message into the folder belonging to “name.” Tomlinson wrote the first network email using his SNDMSG program in 1971. This invention of a simple standard for e-mail represented a critical factor in the rapid spread of the Internet and remains one of the most widely used Internet services.
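The addressing convention can be sketched in a few lines. The `parse_address` and `deliver` functions and the in-memory `mailboxes` dictionary are hypothetical stand-ins for a real mail server, which performs far more validation and uses network protocols to hand messages between hosts.

```python
def parse_address(address: str):
    """Split 'name@host' into (mailbox, host) at the last @ symbol."""
    mailbox, sep, host = address.rpartition("@")
    if not sep or not mailbox or not host:
        raise ValueError(f"not a valid address: {address!r}")
    return mailbox, host

# Hypothetical in-memory "servers": one folder (a list of messages) per user.
mailboxes = {"gmail.com": {"name": []}}

def deliver(address: str, message: str) -> None:
    """Drop the message into the folder that the address points to."""
    mailbox, host = parse_address(address)
    mailboxes[host][mailbox].append(message)

deliver("name@gmail.com", "Hello!")
print(parse_address("name@gmail.com"))  # ('name', 'gmail.com')
print(mailboxes["gmail.com"]["name"])   # ['Hello!']
```

The part after the @ symbol selects the host, and the part before it selects the folder on that host, mirroring the single-mainframe folders described earlier.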

The use of email grew in large part because of later commercial developments, especially America Online, which made connecting to email much easier than previous iterations. Internet service providers (ISPs) packaged e-mail accounts with Internet access, and almost all web browsers (such as Netscape, discussed later in the section) included a form of e-mail service. In addition to the ISPs, email services like Hotmail and Yahoo! Mail provided free email addresses, paid for by small text ads at the bottom of every email message sent. These free “webmail” services soon expanded to comprise a significant portion of the email services available today. Far from the original maximum inbox sizes of a few megabytes, today’s e-mail services, like Google’s Gmail service, generally provide gigabytes of free storage space.

E-mail has revolutionized written communication. The speed and relatively inexpensive nature of e-mail make it a prime competitor of postal services, including FedEx and UPS, that pride themselves on speed. Communicating via e-mail with someone on the other side of the world costs exactly the same as communicating with a next-door neighbor. However, the growth of Internet shopping and online companies such as Amazon has, in many ways, made the postal service and shipping companies more prominent, not necessarily for communication, but for delivery and remote business operations.

Hypertext: Web 1.0

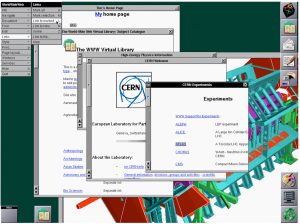

In 1989, Tim Berners-Lee, a graduate of Oxford University and a software engineer at CERN (the European particle physics laboratory), conceived the idea of using a new protocol to share documents and information throughout the local CERN network. Instead of transferring regular text-based documents, he created a new language called Hypertext Markup Language (HTML). The new word, hypertext, describes text that extends beyond the boundaries of a single document. Hypertext can include links to other documents (hyperlinks), text-style formatting, images, and a wide variety of other components.

This new language required a new communication protocol so that computers could interpret it; Berners-Lee decided on the name Hypertext Transfer Protocol (HTTP). Through HTTP, users can send hypertext documents from one computer to another and have them interpreted by a browser, which converts the HTML files into readable web pages. The browser that Berners-Lee created, called the World Wide Web, acted as a combination browser and editor, allowing users to view other HTML documents and create their own (Berners-Lee, 2009).

Modern browsers, like Google Chrome and Mozilla Firefox, only allow for the viewing of web pages (though both offer plugins to customize the experience); companies now market other tools to create web pages, although even the most complicated page can be written entirely in a program like Windows Notepad. The adoption of shared protocols by the most common browsers explains why simple tools can create most web pages. Because Microsoft Edge, Firefox, Apple Safari, Chrome, and other browsers all interpret the same code in more or less the same way, creating web pages is as simple as learning how to speak the language of these browsers.

In 1991, the same year that Berners-Lee created his web browser, the Internet connection service Q-Link renamed itself America Online, or AOL for short. This service would eventually grow to employ over 20,000 people, enabling Internet access (and, critically, making it simple) for anyone with a telephone line. Although the web in 1991 looked and performed differently than it does today, AOL’s software allowed its users to create communities based on just about any subject, and it only required a dial-up modem—a device that connects any computer to the Internet via a telephone line—and a telephone line.

In addition, AOL incorporated two technologies—chat rooms and Instant Messenger—into a single program (along with a web browser). Chat rooms allowed many users to type live messages to a “room” full of people, while Instant Messenger allowed two users to communicate privately via text-based messages. AOL encapsulated all these once-disparate programs into a single user-friendly bundle. Although AOL later became disparaged for customer service issues, such as its users’ inability to deactivate their service, it played an instrumental role in bringing the Internet to mainstream users (Zeller Jr., 2005).

In contrast to AOL’s proprietary services, users needed to access the World Wide Web through a standalone web browser. First came the program Mosaic, released by the National Center for Supercomputing Applications at the University of Illinois, which gained popularity quickly because it was free and offered features that are now integral to the web. Bookmarks, which enable users to save the location of specific pages without needing to remember them, and inline images were both introduced on Mosaic and made the web more usable for many people (National Center for Supercomputing Applications).

Although developers have not updated the web browser Mosaic since 1997, those who worked on it went on to create Netscape Navigator, an extremely popular browser during the 1990s. AOL later acquired the Netscape company, and it discontinued the Navigator browser in 2008. This decision was primarily due to Netscape Navigator’s market share being overtaken by Microsoft’s now-defunct Internet Explorer web browser, which came preloaded on Microsoft’s ubiquitous Windows operating system. However, Netscape had converted its Navigator software into an open-source program called Mozilla Firefox (see table below). In the figures shown, Firefox represents about a quarter of the market, which is not bad considering its lack of advertising and Microsoft’s natural advantage of packaging Internet Explorer with the majority of personal computers.

| Browser | Total Market Share |
|---|---|
| Microsoft Internet Explorer | 62.12% |
| Firefox | 24.43% |
| Chrome | 5.22% |
| Safari | 4.53% |
| Opera | 2.38% |

For Sale: The Web

As web browsers became more available as a less-moderated alternative to AOL’s proprietary service, the web became something like a free-for-all for startup companies. The web of this period, often referred to as Web 1.0 or the “dot-com boom,” featured many specialty sites that used the Internet’s ability for global, instantaneous communication to create a new type of business. During the boom, it seemed as if almost anyone could build a website and sell it for millions of dollars. However, the “dot-com crash” that occurred later that decade seemed to suggest otherwise. Several of these Internet startup companies went bankrupt, taking their shareholders down with them.

Alan Greenspan, then the chairman of the U.S. Federal Reserve, called this phenomenon “irrational exuberance” (Greenspan, 1996), in large part because investors did not necessarily know how to analyze these particular business plans, and companies that had never turned a profit could suddenly sell for millions. The new business models of the Internet may have done well in the stock market, but that did not mean they engaged in sustainable practices. In many ways, investors collectively failed to analyze the business prospects of these companies. Once they realized their mistakes (and the companies went bankrupt), much of the recent market growth evaporated. The invention of new technologies can bring with it the belief that old business tenets no longer apply. Still, this dangerous belief, the “irrational exuberance” Greenspan spoke of, does not necessarily lead to long-term growth.

Some lucky dot-com businesses that formed during the boom survived the crash and are still operating today. For example, eBay, with its online auctions, turned what seemed like a dangerous practice (sending money to a stranger over the Internet) into a daily occurrence. A less-fortunate company, eToys.com, started out promisingly (its stock quadrupled on the day it went public in 1999) but then filed for Chapter 11 bankruptcy in 2001 (Barnes, 2001).

One of these startups, theGlobe.com, provided one of the earliest social networking services, and it quickly gained popularity. When theGlobe.com went public, its stock price surged from a target of $9 to a close of $63.50 per share (Kawamoto, 1998). The site started in 1995, building its business on advertising. As skepticism about the dot-com boom grew and advertisers became increasingly skittish about the value of online ads, theGlobe.com ceased making profits and shut its doors as a social networking site (The Globe, 2009). Although advertising remains pervasive on the Internet today, the current model, primarily based on the highly targeted Google AdSense service, did not come around until much later. In the earlier dot-com years, the same ad might appear on thousands of different web pages. Now, in contrast, advertisers often target their ads specifically to the content of an individual page.

However, that did not spell the end of social networking on the Internet. Social networking had existed since at least the invention of Usenet in 1979 (detailed later in the chapter). Still, all social networks up to that point ran into the same problem: profitability. This model of free access to user-generated content departed from almost anything previously seen in media, and pursuing ways to increase revenue streams would need to incorporate equally radical ways of thinking.

The Early Days of Social Media

The shared, generalized protocols of the Internet have enabled it to adapt easily and extend into various facets of everyday life. The Internet shapes everything from day-to-day routines (the ability to read newspapers from around the world, for example) to the way we conduct research and collaborate. The Internet has changed three essential aspects of communication, and these have instigated profound changes in the way humans connect socially: the speed of information, the volume of information, and the “democratization” of publishing, or the ability of anyone to publish ideas on the web.

One of the Internet’s most significant and revolutionary changes has occurred through social networking. Because of X (formerly Twitter), people can now see what all their friends do in real time; because of blogs, they can consider the opinions of strangers who may never write in traditional print; and because of Facebook, they can find people they have not talked to for decades, all without making a single awkward telephone call.

Recent years have seen an explosion of new content and services, and the phrase “social media” now encompasses websites and apps such as Facebook, X, Instagram, TikTok, Snapchat, and YouTube.

How Did We Get Here? The Late 1970s, Early 1980s, and Usenet

Almost as soon as TCP stitched the various networks together, a former DARPA scientist named Larry Roberts founded the company Telenet, the first commercial packet-switching company. Two years later, in 1977, the invention of the dial-up modem (in combination with the broader availability of personal computers like the Apple II) made it possible for anyone around the world to access the Internet. With availability extended beyond purely academic and military circles, the Internet quickly became a staple for computer hobbyists.

The spread of the Internet to hobbyists led to the founding of Usenet. In 1979, University of North Carolina graduate students Tom Truscott and Jim Ellis connected three computers in a small network. They used a series of programming scripts to post and receive messages. In a remarkably short period, this system spread rapidly across the burgeoning Internet. Much like an electronic version of community bulletin boards, anyone with a computer could post a topic or reply on Usenet.

The fundamentally and explicitly anarchic group outlined its intentions by posting “What is Usenet?” This document says, “Usenet is not a democracy…there is no person or group in charge of Usenet …Usenet cannot be a democracy, autocracy, or any other kind of ‘-acy’” (Moraes et al., 1998). Usenet proved helpful not only for socializing but also for collaboration and sharing information. In some ways, the service allowed a new kind of collaboration that seemed like the start of a revolution: “I was able to join rec.kites and collectively people in Australia and New Zealand helped me solve a problem and get a circular two-line kite to fly,” one user told the United Kingdom’s Guardian (Jeffery et al., 2009).

GeoCities: Yahoo! Pioneers

Fast-forward to 1995: The president and founder of Beverly Hills Internet, David Bohnett, announces that his company, “GeoCities,” would allow users (“homesteaders”) to create web pages in “communities” for free, with the stipulation that the company would place a small advertising banner at the top of each page. Anyone could register a GeoCities site and subsequently build a web page about a topic. Almost all community names, such as Broadway (live theater) and Athens (philosophy and education), focused on specific topics (Archive, 1996).

The concept of centering communities on specific topics may have originated from Usenet. In Usenet, the domain alt.rec.kites refers to a particular topic (kites) within a category (recreation) within a larger community (alternative topics). This hierarchical model allowed users to organize themselves across the vastness of the Internet, even on a large site like GeoCities. GeoCities allowed its users to do much more than post only text (the limitation of Usenet), while constraining them to a relatively small pool of resources: each GeoCities user had only a few megabytes of web space, and standardized pictures, such as mailbox icons and back buttons, were hosted on GeoCities’ central server. GeoCities represented such a large part of the Internet, and these standard icons became so ubiquitous, that they have now become a veritable part of the Internet’s cultural history. The Web Elements category on the Internet Archaeology site offers a good example of how pervasive GeoCities graphics became (Internet Archaeology, 2010).

GeoCities built its business on a freemium model, where users enjoy free basic services but subscribers pay extra for things like commercial pages or shopping carts. Other Internet businesses, such as Skype and Flickr, employ the same model to maintain a vast user base while still profiting from frequent users. Since the loss of online advertising revenue heavily contributed to the dot-com crash, many current web startups are turning toward the freemium model to diversify their income streams (Miller, 2009).

GeoCities’ model proved so successful that the company Yahoo! bought it for $3.6 billion at its peak in 1999. At the time, GeoCities ranked as the third-most-visited site on the web (behind Yahoo! and AOL), so it seemed like a sure bet. A decade later, on October 26, 2009, Yahoo! closed GeoCities for good in every country except Japan.

Diversification of revenue has become one of the most crucial elements of Internet businesses; from The Wall Street Journal online to YouTube, almost every website now looks for multiple income streams to support its services.